Add coq-gpt blog post

This commit is contained in:

parent

d78b47f190

commit

e56d9f09a4

@ -0,0 +1,154 @@

|

|||||||

|

---

|

||||||

|

title: Adventures in AI-assisted proof generation

|

||||||

|

slug: Adventures-in-AI-assisted-proof-generation

|

||||||

|

date: 2023-03-20 00:08:00

|

||||||

|

tags:

|

||||||

|

- chatgpt

|

||||||

|

- languages

|

||||||

|

- theory

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Look, I know LLMs and ChatGPT are the big industry-consuming buzz-words right now. I'm as skeptical of the "ChatGPT is going to replace `{industry}`" mentality as anyone, but it's clear that _something_ is coming and it's going to change the world, whether we like it or not.

|

||||||

|

|

||||||

|

Believe it or not, until a couple days ago, I had largely ignored the LLM craze. Sure, it's cool, and it's coming, but it's not relevant to what I do day-to-day _yet_. Recently, however, I was reading the excellent [Web Curios blog by Matt Muir](https://webcurios.co.uk/webcurios-17-03-23/), and something he said stood out to me:

|

||||||

|

|

||||||

|

> “But Matt!”, I hear you all cry, “what does this MEAN? Please tell me what I should think about all this breathless, breakneck upheaval and change and…is it…progress?” To which the obvious answer is “lol like I know, I’m just some webmong” (and also “is it normal to hear these voices?”), but to which I might also say “This is the point at which I strongly believe it’s important you start to learn how to use this stuff, because I reckon you’ve got maximum a year in which reasonable competence with this sort of kit makes you look genuinely smart”, but also “GPT4 is literally Wegovy for white collar office workers, insofar as everyone will be using it to give themselves a professional tweakup but people will be a bit cagey about admitting it”

|

||||||

|

|

||||||

|

So, begrudgingly, I fired up OpenAI, handed over my e-mail and phone number, and started playing around with ChatGPT. I tried all the normal things ("generate a Bash script to do X", "write a new cold open for X show in the style of Y"), but I stumbled upon something interesting, rather by accident.

|

||||||

|

|

||||||

|

## ~~The~~ A Problem With Coq

|

||||||

|

|

||||||

|

(If you are familiar with Coq/provers, feel free to skip my basic and somewhat controversial explanation in this section.)

|

||||||

|

|

||||||

|

When I was in school, one of my areas of focus was formal methods, a computing discipline that concerns itself with the rigorous theory of programming languages and programs. One of the major applications of FM is so-called "verified computing" -- computing which has been formally proven correct. Applications of verified computing are legion, including security-sensitive environments -- like embedded devices, drones, and spacecraft -- but have gradually extended to broader applications like secure boot and mainstream languages like TypeScript.

|

||||||

|

|

||||||

|

The FM community has coalesced around a series of tools called interactive theorem provers which provide syntax for modeling theorems, composing them, and constructing proofs over them. The lab I worked with used one of the most popular, the [Coq Proof Assistant](https://coq.inria.fr/). Coq contains a rigid, functional programming language and a less-rigid proof scripting language which operates over it.

|

||||||

|

|

||||||

|

Despite advocates claims that provers are ["going mainstream any year now,"](http://adam.chlipala.net/) these proof assistants struggle in general -- and Coq in particular -- with a lack of automation. Quite extensive knowledge is required to prove even [basic lemmas](https://stackoverflow.com/questions/48416538/writing-proofs-of-simple-arithmetic-in-coq), largely due to the strict nature of the runtime and its unwillingness to make assumptions which may turn out to be logically inconsistent. Undoubtedly, this will improve with time as automations and layers of abstraction are introduced to the system, but it remains the largest barrier to entry for programmers wanting to write provably-correct code.

|

||||||

|

|

||||||

|

## ~~The~~ A Problem with ChatGPT

|

||||||

|

|

||||||

|

Of the many [technical](https://www.forbes.com/sites/bernardmarr/2023/03/03/the-top-10-limitations-of-chatgpt), [societal](https://www.theguardian.com/technology/2023/mar/18/chatgpt-said-i-did-not-exist-how-artists-and-writers-are-fighting-back-against-ai), and [moral](https://www.scu.edu/ethics-spotlight/generative-ai-ethics/a-moral-panic-chatgpt-and-the-gamification-of-education/) quandaries spurred by ChatGPT is its complete willingness to fabricate lies disguised as the truth. Because all the LLM is doing is generating what it deems the statistically most likely response, it may be incorrect. However, its advanced language _syntax_ model enables it to present its incorrect information in an eloquent, tidy, convincing format (the boon of misinformation farms, but I digress...).

|

||||||

|

|

||||||

|

Obviously proving whether or not the output of ChatGPT is correct _generally_ is an insane and likely impossible proposition, there's an interesting rabbit hole to be found on the boundary of AI-generated code snippets and Coq proofs. A wedding between the embodiment of industry buzzwords and something which is decidedly not. You see, it turns out that one of the niche languages that ChatGPT understands reasonably well is Ltac, the proof scripting language built into Coq.

|

||||||

|

|

||||||

|

## Factorial

|

||||||

|

|

||||||

|

A common baby's-second-proof in Coq is the factorial problem. Model the factorial function as a recursive function and an inductive relation, then prove that the two models are equivalent. So, I started by asking ChatGPT:

|

||||||

|

|

||||||

|

> "Show me an implementation of the factorial algorithm in Coq."

|

||||||

|

|

||||||

|

This is what it came up with:

|

||||||

|

|

||||||

|

```text

|

||||||

|

Fixpoint factorial (n : nat) : nat :=

|

||||||

|

match n with

|

||||||

|

| O => 1

|

||||||

|

| S n' => n * factorial n'

|

||||||

|

end.

|

||||||

|

```

|

||||||

|

|

||||||

|

This compiled in Coq and, upon inspection, this appears correct. Impressive. Next, I asked it something a bit more challenging:

|

||||||

|

|

||||||

|

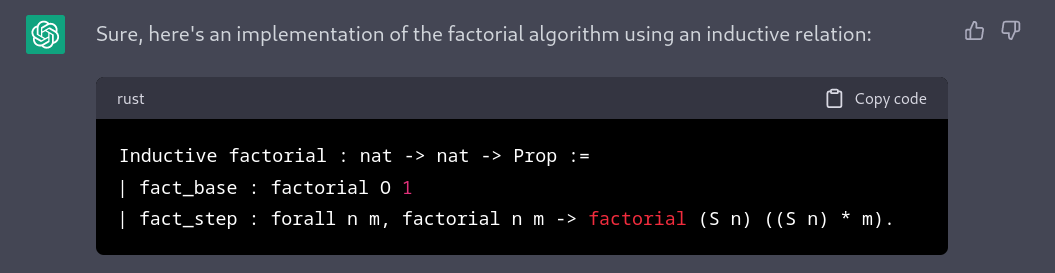

> "Now, show me an implementation of the factorial algorithm in Coq using an inductive relation instead of a recursive function."

|

||||||

|

|

||||||

|

```text

|

||||||

|

Inductive factorial : nat -> nat -> Prop :=

|

||||||

|

| fact_base : factorial O 1

|

||||||

|

| fact_step : forall n m, factorial n m -> factorial (S n) ((S n) * m).

|

||||||

|

```

|

||||||

|

|

||||||

|

This also compiled fine, and I think this is much more impressive. The LLM produced a reasonable model of a factorial system, "inferring" what I meant by "inductive relation." ChatGPT also helpfully provided an example lemma for this relation:

|

||||||

|

|

||||||

|

```text

|

||||||

|

Example factorial_5 : factorial 5 120.

|

||||||

|

Proof.

|

||||||

|

apply (fact_step 4 24).

|

||||||

|

apply (fact_step 3 6).

|

||||||

|

apply (fact_step 2 2).

|

||||||

|

apply (fact_step 1 1).

|

||||||

|

apply fact_base.

|

||||||

|

Qed.

|

||||||

|

```

|

||||||

|

|

||||||

|

This is where things started to get interesting to me. I didn't even ask for an example, but in its quest to explain itself, ChatGPT generated Ltac code demonstrating an example usage of its inductive relation. I was curious, so I chucked it into [Coqtail](https://github.com/whonore/Coqtail) and tried it out:

|

||||||

|

|

||||||

|

```text

|

||||||

|

Unable to unify "factorial 0 1" with "factorial 1 1".

|

||||||

|

```

|

||||||

|

|

||||||

|

Hm. It looks like the ChatGPT proof missed a step between `apply (fact_step 1 1).` and `apply fact_base.` Adding in an `apply (fact_step O 1).` between them pops it out.

|

||||||

|

|

||||||

|

After my initial doubts in ChatGPT's "knowledge" of Coq, I started to get a little more ambitious:

|

||||||

|

|

||||||

|

> "Now, show me a proof that the inductive relation is equivalent to the recursive function definition."

|

||||||

|

|

||||||

|

```text

|

||||||

|

Lemma factorial_equiv : forall n, factorial n (factorial_rec n).

|

||||||

|

Proof.

|

||||||

|

induction n.

|

||||||

|

- apply fact_base.

|

||||||

|

- simpl.

|

||||||

|

apply (fact_step n (factorial_rec n)).

|

||||||

|

apply IHn.

|

||||||

|

Qed.

|

||||||

|

|

||||||

|

Lemma factorial_rec_equiv : forall n, factorial_rec n = factorial n.

|

||||||

|

Proof.

|

||||||

|

intros n.

|

||||||

|

unfold factorial_rec.

|

||||||

|

induction n.

|

||||||

|

- reflexivity.

|

||||||

|

- simpl.

|

||||||

|

rewrite IHn.

|

||||||

|

apply (fact_step n (factorial n)).

|

||||||

|

apply factorial_equiv.

|

||||||

|

Qed.

|

||||||

|

```

|

||||||

|

|

||||||

|

Now, that's something! There are so many interesting things about this response. First of all, it inferred that a "proof of equivalence" would require a lemma in both directions. Second of all, the Ltac it generated has _structure_ and is organized using bullets, not just a large blob of proof commands. Even more, it was able to account for the conflict between the recursive `factorial` function it generated and the inductive `factorial` relation it generated, renaming the recursive function `factorial_rec`.

|

||||||

|

|

||||||

|

To my amazement, the `factorial_equiv` popped out without any modifications, and the bullets structure even made sense. The `factorial_rec_equiv` lemma however... has some problems. For one, neither `factorial_rec` nor `factorial` is single-parameter, so the lemma was missing an entire universal variable. I rewrote the theorem manually, to give the LLM a hint:

|

||||||

|

|

||||||

|

> "Modify the `factorial_req_equiv` lemma to prove: `forall n m, factorial_rec n = m -> factorial n m"`

|

||||||

|

|

||||||

|

```text

|

||||||

|

Lemma factorial_rec_equiv : forall n m, factorial_rec n = m -> factorial n m.

|

||||||

|

Proof.

|

||||||

|

intros n m H.

|

||||||

|

rewrite <- H.

|

||||||

|

clear H.

|

||||||

|

induction n.

|

||||||

|

- apply fact_base.

|

||||||

|

- simpl.

|

||||||

|

apply (fact_step n (factorial_rec n)).

|

||||||

|

apply IHn.

|

||||||

|

reflexivity.

|

||||||

|

Qed.

|

||||||

|

```

|

||||||

|

|

||||||

|

Aside from the fact that the last `reflexivity` is not needed, this theorem popped out perfectly. Now, I not only had an AI-generated factorial relation, but I had an AI-generated proof that that relation was correct (or at least equivalent to the recursive definition I defined as "correct").

|

||||||

|

|

||||||

|

This is absolutely amazing to me, but of course there are caveats to note:

|

||||||

|

|

||||||

|

- First, this is an exceedingly simple example, and as you add libraries, lemmas, and other code it would become difficult to provide ChatGPT enough context for it to generate coherent results.

|

||||||

|

- Second, ChatGPT's generation of these outputs was _slow_. So slow, in fact, that several times when I returned to the tab from something else, it had timed-out and needed to be retried. But, it did finish.

|

||||||

|

- Third, obviously my definition of "proven correctness" assumes logical consistency in the proof engine, but in this line of work that's a fair assumption to make.

|

||||||

|

|

||||||

|

Importantly, however, were I proving this for the first time, it would probably take _more_ time to work my way through the various proof tactics and commands.

|

||||||

|

|

||||||

|

I think this has a few interesting implications: first, for theorem proving. The barrier to entry for theorem provers is largely an issue with how difficult it is to construct proof tactics. If ChatGPT generates a string of proof tactics which pops out a proof goal, then one of two things has happened. Either, (1) it has found a set of tactics which genuinely proves the statement, or (2) it has found a logical inconsistency -- a bug -- in the proof engine.

|

||||||

|

|

||||||

|

The latter is the stuff of nightmares, and is generally not much of an issue in mature tools like Coq. The former, however, is really cool. Imagine a utopic world where you can hand a proof and a universe of statements to your theorem prover and, rather than requiring carefully constructed hints, [the `auto` tactic](https://coq.inria.fr/refman/proofs/automatic-tactics/auto.html) could use an LLM to generate the tactics for the proof. Even if it fails, it provides the user a much closer starting point than writing the proof from scratch.

|

||||||

|

|

||||||

|

There's a much broader and more interesting implication here, though. One problem with ChatGPT-generated code is that it may simply be completely wrong. So, what if we make ChatGPT _prove_ that its code is correct? This, combined with Coq's [excellent extractors](https://coq.inria.fr/refman/addendum/extraction.html), could free up not just FM people, but even normal developers to create verified implementations while spending less time in the weeds with the prover. `auto` on crack.

|

||||||

|

|

||||||

|

## Conclusion?

|

||||||

|

|

||||||

|

I honestly don't know how I feel about ChatGPT in general, or even how it behaves in this particular situation. Maybe it simply does not scale to the complexity necessary to be useful. Maybe a rise in AI-generated Ltac will poison [yet another vibrant FOSS community](https://joemorrison.medium.com/death-of-an-open-source-business-model-62bc227a7e9b). It really feels like there's a social reckoning on the way.

|

||||||

|

|

||||||

|

Regardless, it seems like ChatGPT's usefulness as a programming tool may extend -- just a bit -- to the world of verified programming.

|

||||||

|

|

||||||

|

(I [created a repo](https://code.garrettmills.dev/garrettmills/blog-chatgpt-coq) where you can find the generated Coq code as well as the output from my ChatGPT session.)

|

||||||

Loading…

Reference in New Issue

Block a user