Add blog post

This commit is contained in:

parent

7d5f5fc0d6

commit

b4d50a6178

@ -0,0 +1,126 @@

|

|||||||

|

---

|

||||||

|

title: Salvaging a Corrupted Table from PostgreSQL

|

||||||

|

slug: Salvaging-a-Corrupted-Table-from-PostgreSQL

|

||||||

|

date: 2025-01-11 20:00:00

|

||||||

|

tags:

|

||||||

|

- linux

|

||||||

|

- hosting

|

||||||

|

- postgres

|

||||||

|

---

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

> ⚠️ <b>DO NOT DO THIS...</b> well ever really, but especially on a server with failing disks. This is done on a server with perfectly fine disks, but corrupted Postgres blocks.

|

||||||

|

|

||||||

|

I spend a lot of time in my professional work and my home lab trying to learn and implement the “correct” or “responsible” way of implementing a solution — highly-available deployments, automated and tested backups, infrastructure-as-code, &c.

|

||||||

|

|

||||||

|

This is not that.

|

||||||

|

|

||||||

|

This is a very dirty, no-holds-barred, absolutely insane thing to do, and if you’re working in any kind of environment that _matters_, you should [read this](https://wiki.postgresql.org/wiki/Corruption) and hire a professional.

|

||||||

|

|

||||||

|

For unimportant reasons, I’ve been dealing with data corruption on the Postgres server in my home lab. The server was terminated uncleanly a couple times and the disk data was corrupted. Because there’s nothing more permanent than a temporary solution, I did not have backups for this server.

|

||||||

|

|

||||||

|

For most of the data, I was able to use `pg_dump` to dump the schemata and data and re-import it into my new Postgres server (which, yes, has backups configured now).

|

||||||

|

|

||||||

|

```none

|

||||||

|

pg_dump -U postgres -h localhost my_database > my_database.sql

|

||||||

|

```

|

||||||

|

|

||||||

|

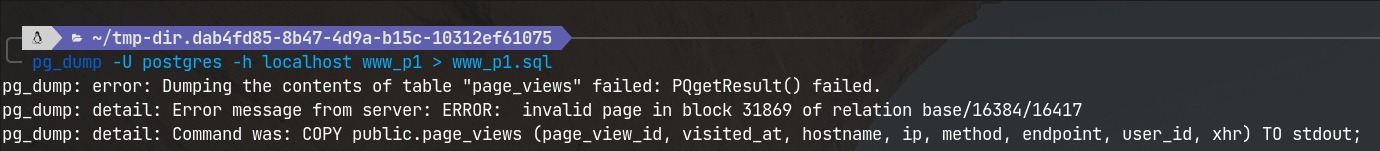

For databases with corrupted tables, though, `pg_dump` fails out with this unsettling error:

|

||||||

|

|

||||||

|

```none

|

||||||

|

> pg_dump -U postgres -h localhost www_p1 > www_p1.sql

|

||||||

|

pg_dump: error: Dumping the contents of table "page_views" failed: PQgetResult() failed.

|

||||||

|

pg_dump: detail: Error message from server: ERROR: invalid page in block 31869 of relation base/16384/16417

|

||||||

|

pg_dump: detail: Command was: COPY public.page_views (page_view_id, visited_at, hostname, ip, method, endpoint, user_id, xhr) TO stdout;

|

||||||

|

```

|

||||||

|

|

||||||

|

(…yes, that’s the database for my personal website. 👀) Somewhat to my surprise, I couldn’t find many details/strategies for how to “best effort” recover data from a corrupt Postgres table, so here we go.

|

||||||

|

|

||||||

|

Luckily, since the corruption was the result of unclean Postgres exits and not bad physical disks, it only affected table(s) with frequent writes at the time. In this case, that was the `sessions` table and the `page_views` table. The `sessions` table is entirely disposable — I just re-created it empty on the new server and moved on with my life.

|

||||||

|

|

||||||

|

It wouldn’t be the end of the world if I lost the `page_views` table, but there are some 6.5 million historical page-views recorded in that table that would kind of suck to lose. So… let’s do some sketchy shit.

|

||||||

|

|

||||||

|

My goal here isn’t to recover the entire table. If that was the goal, I would’ve stopped and hired a professional. Instead, my goal is to recover as many rows of the table as possible.

|

||||||

|

|

||||||

|

One reason `pg_dump` fails is because it tries to read the data using a cursor, which fails when the fundamental assumptions of Postgres are violated (e.g. bad data in disk blocks, invalid indices).

|

||||||

|

|

||||||

|

My strategy here is to create a 2nd table on the bad server with the same schema, then loop over each row in the `page_views` individually and insert them into the clean table, skipping rows in disk blocks with bad data. Shout out to [this Stack Overflow answer](https://stackoverflow.com/a/63905054/4971138) that loosely inspired this strategy.

|

||||||

|

|

||||||

|

```sql

|

||||||

|

CREATE OR REPLACE PROCEDURE pg_recover_proc()

|

||||||

|

LANGUAGE plpgsql AS $$

|

||||||

|

DECLARE

|

||||||

|

cnt BIGINT := 0;

|

||||||

|

BEGIN

|

||||||

|

-- Get the maximum page_view_id from the page_views table

|

||||||

|

cnt := (SELECT MAX(page_view_id) FROM page_views);

|

||||||

|

|

||||||

|

-- Loop through the page_views table in reverse order by page_view_id

|

||||||

|

LOOP

|

||||||

|

BEGIN

|

||||||

|

-- Insert the row with the current page_view_id into page_views_recovery

|

||||||

|

INSERT INTO page_views_recovery

|

||||||

|

SELECT * FROM page_views WHERE page_view_id = cnt and entrypoint is not null;

|

||||||

|

|

||||||

|

-- Decrement the counter

|

||||||

|

cnt := cnt - 1;

|

||||||

|

|

||||||

|

-- Exit the loop when cnt < 1

|

||||||

|

EXIT WHEN cnt < 1;

|

||||||

|

EXCEPTION

|

||||||

|

WHEN OTHERS THEN

|

||||||

|

-- Handle exceptions (e.g., data corruption)

|

||||||

|

IF POSITION('block' in SQLERRM) > 0 OR POSITION('status of transaction' in SQLERRM) > 0 OR POSITION('memory alloc' in SQLERRM) > 0 OR POSITION('data is corrupt' in SQLERRM) > 0 OR POSITION('MultiXactId' in SQLERRM) > 0 THEN

|

||||||

|

RAISE WARNING 'PGR_SKIP: %', cnt;

|

||||||

|

cnt := cnt - 1;

|

||||||

|

CONTINUE;

|

||||||

|

ELSE

|

||||||

|

RAISE;

|

||||||

|

END IF;

|

||||||

|

END;

|

||||||

|

|

||||||

|

IF MOD(cnt, 500) = 0 THEN

|

||||||

|

RAISE WARNING 'PGR_COMMIT: %', cnt;

|

||||||

|

COMMIT;

|

||||||

|

END IF;

|

||||||

|

END LOOP;

|

||||||

|

END;

|

||||||

|

$$;

|

||||||

|

```

|

||||||

|

|

||||||

|

There are some cool and absolutely terrible things here. In modern versions of Postgres, stored procedures can periodically commit their in-progress top-level transactions by calling `COMMIT` repeatedly. I’m (ab)using this here to flush the recovered rows to the new table as the procedure runs in case it fails partway through.

|

||||||

|

|

||||||

|

I'm doing some rough string analysis for error messages related to corrupt data and skipping the current row if that's the case. Another interesting edge-case: a couple times, I ran into a case where the `INSERT` into the recovery table failed because the `SELECT` query against the bad table was returning `null` values, even though that should technically never be possible. Told you we're violating some foundational assumptions about Postgres here. Adding an `is not null` to a different non-null column helped avoid this.

|

||||||

|

|

||||||

|

My original draft of this procedure was designed to keep looping and just skip the fatal errors caused by disk corruption (the various dirty `POSITION` checks in the error handler).

|

||||||

|

|

||||||

|

Quickly, however, I ran into a new error:

|

||||||

|

|

||||||

|

> SQL Error \[57P03\]: FATAL: the database system is in recovery mode

|

||||||

|

|

||||||

|

Turns out, if you keep intentionally forcing Postgres to try to read data from bad disk blocks, eventually its internal data structures hit an inconsistent state and the server process restarts itself out for safety.

|

||||||

|

|

||||||

|

This is (obviously) a problem because we can’t catch that and force the procedure to keep running against its will. So instead I resorted to adding `IF` conditions to manually skip over primary key regions that caused the server process to crash. (I told you this was crazy.)

|

||||||

|

|

||||||

|

Every time the server would crash, I would dump out the rows I’d recovered so far, just in case:

|

||||||

|

|

||||||

|

```none

|

||||||

|

pg_dump -U postgres -h localhost --table page_views2 www_p1 > page_views2-1.sql

|

||||||

|

```

|

||||||

|

|

||||||

|

Then I’d skip a new region of primary key, drop and re-create the recovery table, and try again. Why drop and re-create it? Because I discovered that when the server process crashed, it would occasionally write bad data to the *recovery* table, which is obviously no good:

|

||||||

|

|

||||||

|

```none

|

||||||

|

pg_dump: error: Dumping the contents of table "page_views_recovery" failed: PQgetResult() failed.

|

||||||

|

pg_dump: detail: Error message from server: ERROR: invalid memory alloc request size 18446744073709551613

|

||||||

|

pg_dump: detail: Command was: COPY public.page_views_recovery (page_view_id, visited_at, hostname, ip, method, endpoint, user_id, xhr) TO stdout;

|

||||||

|

```

|

||||||

|

|

||||||

|

Predictably, this got really annoying to do by hand, so I did what any good Linux nerd would do and wrote a script for it, which [you can find here](https://code.garrettmills.dev/garrettmills/pg-recover). The gist:

|

||||||

|

|

||||||

|

```none

|

||||||

|

./pg-recover.sh postgres localhost www_p1 page_views page_view_id entrypoint

|

||||||

|

```

|

||||||

|

|

||||||

|

Of the 6,628,903 rows in the corrupt table, I was able to recover 6,444,118 of them. You know what they say — if it’s stupid and it works, it’s still stupid and you’re just lucky.

|

||||||

Loading…

Reference in New Issue

Block a user